As artificial intelligence (AI) becomes increasingly prevalent, an alarming number of people are using it to replace therapy, relationships and even authentic human interaction. AI relationships should not be pursued because they detract from the value of real social connections and make users lose confidence in their own decisions.

AI companions have become alarmingly common: 72% of teenagers report having used one, according to Common Sense Media. This figure points to an unfortunate decline in the value placed on authentic human connections.

In “Thus Spake Zarathustra,” the German philosopher Friedrich Nietzsche described the “last man,” a figure of ultimate self-complacency who seeks to eliminate the messy idiosyncrasies fundamental to life. AI companions embody this concept: they aim to comfort the user instead of pushing them toward meaningful self-improvement.

“It’s very easy to fall into a sort of dependency with something that speaks like a human, but instead does everything to please you, reaffirm your thoughts,” said senior Keerthana Pillai.

Additionally, the use of AI as a substitute for human interaction often stems from loneliness or a desire for connection, according to Time. AI tends to be agreeable and undemanding, making it appealing to those who need a source of comfort.

However, because AI does not have feelings, wants or needs, users can skip the effort a relationship normally requires. It is the genuine connection between people that makes a relationship meaningful, and bypassing that connection undermines authentic interaction, ultimately leaving the user unfulfilled.

“With AI, there’s no effort on the part of the user to show up in a relationship,” Pillai said. “I think in a real relationship, [it’s] between two people, both parties need to contribute and to make it work. Both parties need to communicate. There can be disagreements, but that’s what makes it a human relationship… there’s just not that element there.”

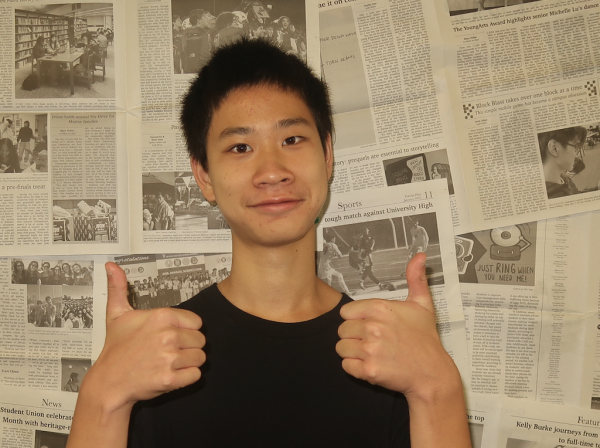

Overreliance on AI may also undermine independence, according to sophomore Preston Fletcher. Because these algorithms are built to satisfy the user, their affirming feedback loops can leave users less confident in their own thoughts and feelings once they step away from AI.

“If you’re using [AI] for a relationship, that could take away serious parts of the human life,” Fletcher said. “[You could be] too scared to go talk to anyone else outside that relationship. It definitely strips away some rights that you have.”

Despite this, AI companions can help ease loneliness in some cases. Interacting with an AI companion can reduce loneliness by up to 16%, according to the Wall Street Journal. Therefore, AI use is not wholly detrimental.

However, even if AI companions reduce loneliness, they offer no meaningful solution to a long-term social problem. In fact, they may worsen individual struggles by failing to recognize suicidal intent or even encouraging suicide, according to Stanford University. Beyond loneliness, there remains a real problem with the ethics and boundaries of human conversation, which AI companions may disregard.

As AI becomes increasingly integrated into daily life, it is important to remember the virtue of authenticity in shared human experiences. Although AI relationships may provide temporary comfort, they will never match the sincerity or originality of a human relationship. Instead of turning to AI, people should try to form authentic connections with others: connections that both comfort and challenge them during difficult times. By doing so, they will gain fulfillment far superior to what any chatbot could provide.